Running Locally

You have two options:

- Use the Dev Container. This takes about 7 minutes. It can be used with VSCode, the devcontainer CLI, or GitHub Codespaces.
- Install the requirements on your computer manually. This takes about 1 hour.

Development Container

The development container should let you do everything you need to develop Argo Workflows without installing tools on your local machine. Building the container takes quite a long time. It runs k3d inside the container, so you have a cluster to test against. To communicate with services running either in other development containers or directly on the local machine (e.g. a database), use host.docker.internal:<PORT> as the address in the workflow spec. This makes it possible to write workflows that need to connect to a database or an API server.

You can use the development container in a few different ways:

- Visual Studio Code with the Dev Containers extension. Open your argo-workflows folder in VSCode and it should offer to use the development container automatically. VSCode will let you forward ports so that your external browser can access the running components.
- devcontainer CLI. Once it is installed, go to your argo-workflows folder and run devcontainer up --workspace-folder . followed by devcontainer exec --workspace-folder . /bin/bash to get a shell where you can build the code. You can use any editor outside the container to edit code; any changes will be mirrored inside the container. Due to a limitation of the CLI, only port 8080 (the Web UI) will be exposed for you to access if you run this way. Other services are usable from the shell inside the container.
- GitHub Codespaces. You can start editing as soon as VSCode is open, though you may want to wait for pre-build.sh to finish installing dependencies, building binaries, and setting up the cluster before running any commands in the terminal. Once you start running services (see Developing Locally below), you can click on the "PORTS" tab in the VSCode terminal to see all forwarded ports. You can open the Web UI in a new tab from there.

Once you have entered the container, continue to Developing Locally.

Note:

- For Apple Silicon:
  - Setup on this platform can take 3 times as long as indicated.
  - Configure Docker Desktop to use BuildKit by adding "features": { "buildkit": true } to its Docker Engine settings.
- For Windows WSL2:
  - Configure .wslconfig to limit memory usage by WSL2, to prevent VSCode running out of memory (OOM).
- For Linux:
  - Use Docker Desktop instead of Docker Engine, to prevent k3d from configuring the network incorrectly.

Requirements

Clone the Git repo into $GOPATH/src/github.com/argoproj/argo-workflows. Any other path will break code generation.
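Because code generation depends on this exact location, a quick check before building can save a confusing failure later. The following is a minimal sketch, not part of the Argo repository: check_checkout_path is a hypothetical helper name, and it assumes GOPATH falls back to $HOME/go when unset, which is Go's default.

```shell
# Hypothetical helper (not part of the Argo repo): succeeds only when the
# current directory is the checkout location that code generation expects.
check_checkout_path() {
  # Go defaults GOPATH to $HOME/go when the variable is unset.
  gopath="${GOPATH:-$HOME/go}"
  expected="$gopath/src/github.com/argoproj/argo-workflows"
  if [ ! -d "$expected" ]; then
    echo "expected checkout not found: $expected" >&2
    return 1
  fi
  # Compare physical paths so symlinked directories do not cause false mismatches.
  if [ "$(pwd -P)" != "$(cd "$expected" && pwd -P)" ]; then
    echo "run this from $expected (currently in $(pwd -P))" >&2
    return 1
  fi
  echo "checkout location OK: $expected"
}
```

For example, clone with git clone https://github.com/argoproj/argo-workflows.git "${GOPATH:-$HOME/go}/src/github.com/argoproj/argo-workflows", change into that directory, and run check_checkout_path.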

Add the following to your /etc/hosts:

127.0.0.1 dex
127.0.0.1 minio
127.0.0.1 postgres
127.0.0.1 mysql
127.0.0.1 azurite
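Adding these entries by hand is easy to get wrong when some of them already exist. A minimal sketch, assuming a POSIX shell and a grep that supports extended regular expressions; missing_hosts is a hypothetical helper name. It only prints the entries that are missing, so you stay in control of how they are written.

```shell
# Hypothetical helper: print the required 127.0.0.1 entries that are missing
# from a hosts file (defaults to /etc/hosts). It never modifies the file.
missing_hosts() {
  hosts_file="${1:-/etc/hosts}"
  for name in dex minio postgres mysql azurite; do
    # Match "127.0.0.1 ... <name>" with <name> as a whole word, spaces or tabs allowed.
    if ! grep -qE "^127\.0\.0\.1[[:space:]]+([^#]*[[:space:]])?$name([[:space:]]|\$)" "$hosts_file"; then
      echo "127.0.0.1 $name"
    fi
  done
}
```

To append only what is missing, run missing_hosts | sudo tee -a /etc/hosts; when every entry is already present, nothing is appended.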

To build on your own machine without using the Dev Container you will need:

We recommend using K3D to set up the local Kubernetes cluster, since it is fast and allows you to test your RBAC set-up. You can set up K3D to be part of your default kube config as follows:

k3d cluster start --wait

Alternatively, you can use Minikube to set up the local Kubernetes cluster.

Once a local Kubernetes cluster has started via minikube start, your kube config will use Minikube's context automatically.

Warning

Do not use Docker Desktop's embedded Kubernetes: it does not support Kubernetes RBAC (i.e. kubectl auth can-i always reports that an action is allowed).
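One way to check whether your current cluster enforces RBAC is to ask kubectl auth can-i on behalf of an unprivileged identity. This is a minimal sketch, not an Argo tool: rbac_enforced, the service account name rbac-probe, and the KUBECTL override variable are all hypothetical, and it assumes your own credentials are allowed to impersonate service accounts (cluster admins usually are).

```shell
# Hypothetical check (not part of the Argo repo): ask the API server whether an
# impersonated, unprivileged service account may create pods. With RBAC in
# effect the answer is "no"; a "yes" suggests RBAC is not being enforced.
# KUBECTL (hypothetical) overrides the kubectl command, e.g. for testing.
rbac_enforced() {
  # kubectl auth can-i exits non-zero for "no"; "|| true" keeps its output.
  answer=$(${KUBECTL:-kubectl} auth can-i create pods \
    --as=system:serviceaccount:default:rbac-probe 2>/dev/null || true)
  case "$answer" in
    no*)  echo "RBAC is enforced"; return 0 ;;
    yes*) echo "RBAC is NOT enforced: the probe was allowed" >&2; return 1 ;;
    *)    echo "could not get an answer from the cluster" >&2; return 2 ;;
  esac
}
```

Run rbac_enforced after your cluster is up; the final branch keeps a connection error from being mistaken for a "no".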

Developing Locally

To start:

- The controller, so you can run workflows.

- MinIO (http://localhost:9000, use admin/password) so you can use artifacts.

Run:

make start

This runs the Workflow Controller locally on your machine (not in Docker/Kubernetes). Make sure you don't see any errors in your terminal.

You can submit a workflow for testing using kubectl:

kubectl create -f examples/hello-world.yaml

We recommend running make clean before make start to ensure recompilation.

If you made changes to the executor, you need to build the image:

make argoexec-image

You can use the TARGET_PLATFORM environment variable to compile images for specific platforms:

# compile for both arm64 and amd64

make argoexec-image TARGET_PLATFORM=linux/arm64,linux/amd64

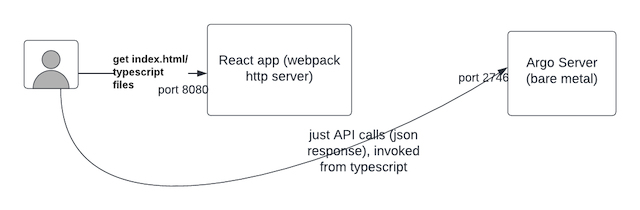

To also start the API on http://localhost:2746:

make start API=true

This runs the Argo Server (in addition to the Workflow Controller) locally on your machine.

To also start the UI on http://localhost:8080 (UI=true implies API=true):

make start UI=true

If you are making changes to the CLI (i.e. Argo Server), you can build it separately:

make cli

./dist/argo submit examples/hello-world.yaml ;# new CLI is created as `./dist/argo`

Note, however, that the CLI is also built automatically when you run make start API=true.

To test the workflow archive, use PROFILE=mysql or PROFILE=postgres:

make start PROFILE=mysql

You'll have either:

- Postgres on localhost:5432; run make postgres-cli to access it.
- MySQL on localhost:3306; run make mysql-cli to access it.

To test SSO integration, use PROFILE=sso:

make start UI=true PROFILE=sso

Running E2E tests locally

Start up Argo Workflows using the following:

make start PROFILE=mysql AUTH_MODE=client STATIC_FILES=false API=true

If you want to run Azure tests against a local Azurite:

kubectl -n $KUBE_NAMESPACE apply -f test/e2e/azure/deploy-azurite.yaml

make start

Running One Test

In most cases, you only need to run the tests that relate to your changes locally. You should not run all the test suites: our CI runs them concurrently when you create a PR, which gives you feedback much faster.

Find the test that you want to run in test/e2e, then run it by name, for example:

make TestArtifactServer
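If you know a test's name but not its file, a recursive grep over test/e2e finds its declaration. A minimal sketch; find_test is a hypothetical helper, and it assumes tests are declared either as plain Go test functions or as methods on a test-suite receiver, such as func (s *SomeSuite) TestArtifactServer().

```shell
# Hypothetical helper: print file:line for the declaration of a named test,
# matching both "func TestX(" and suite methods like "func (s *Suite) TestX(".
# The optional second argument is the directory to search (default: test/e2e).
find_test() {
  name="$1"
  dir="${2:-test/e2e}"
  grep -rnE "^func (\([^)]*\) )?${name}\(" "$dir"
}
```

For example, find_test TestArtifactServer shows where that test lives; the trailing "(" in the pattern keeps TestArtifact from also matching TestArtifactServer.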

Running A Set Of Tests

You can find the build tag at the top of the test file.

//go:build api

You need to run make test-{buildTag}, so for api that would be:

make test-api
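The mapping from build tag to make target is mechanical, so it can be read straight from the file. A minimal sketch; make_target_for is a hypothetical helper, and it assumes the first //go:build line starts with a single tag identifier.

```shell
# Hypothetical helper: read the first //go:build constraint in a test file and
# print the matching make target. Only the first identifier is used, so a
# compound constraint such as "api && !windows" would yield "test-api".
make_target_for() {
  tag=$(sed -n 's|^//go:build \([A-Za-z0-9_]*\).*|\1|p' "$1" | head -n 1)
  if [ -z "$tag" ]; then
    echo "no //go:build tag found in $1" >&2
    return 1
  fi
  echo "make test-$tag"
}
```

For a file under test/e2e whose first line is //go:build api, this prints make test-api.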

Diagnosing Test Failure

Tests often fail: that's good. To diagnose a failure:

- Run kubectl get pods: are the pods in the state you expect?
- Run kubectl get wf: is your workflow in the state you expect?
- What do the pod logs say? Check them with kubectl logs.
- Check the controller and argo-server logs. These are printed to the console you ran make start in. Is anything logged at level=error?
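The last check is easier if the make start output is also saved to a file, for example with make start 2>&1 | tee /tmp/argo-start.log (the log path is an arbitrary choice). A minimal sketch of a hypothetical helper, log_errors, that summarises the level=error lines in such a file:

```shell
# Hypothetical helper: report level=error lines found in a saved log file.
# Returns 0 when there are none, 1 when some were found, 2 if the file is missing.
log_errors() {
  log="$1"
  if [ ! -f "$log" ]; then
    echo "log file not found: $log" >&2
    return 2
  fi
  # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps set -e happy.
  count=$(grep -c 'level=error' "$log" || true)
  if [ "$count" -eq 0 ]; then
    echo "no level=error lines in $log"
    return 0
  fi
  echo "$count level=error line(s) in $log:"
  grep 'level=error' "$log"
  return 1
}
```

Running log_errors /tmp/argo-start.log from a second terminal gives a quick summary without scrolling back through the console.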

If tests run slowly or time out, factory reset your Kubernetes cluster.

Committing

Before you commit code and raise a PR, always run:

make pre-commit -B

Please do the following when creating your PR:

- Sign off your commits.

- Use Conventional Commit messages.

- Suffix the commit message with the issue number.

Examples:

git commit --signoff -m 'fix: Fixed broken thing. Fixes #1234'

git commit --signoff -m 'feat: Added a new feature. Fixes #1234'
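You can check a message against these conventions before pushing. A minimal sketch, not an official Argo tool: check_commit_subject and has_signoff are hypothetical helpers, and the list of accepted commit types is an assumption based on common Conventional Commits usage.

```shell
# Hypothetical check of the subject line: "<type>[(scope)][!]: <text> Fixes #<n>".
# The accepted types below are an assumption, not taken from the Argo docs.
check_commit_subject() {
  printf '%s\n' "$1" | head -n 1 |
    grep -qE '^(feat|fix|docs|chore|refactor|test|ci|build|perf|style|revert)(\([a-z0-9-]+\))?!?: .+ Fixes #[0-9]+$'
}

# Succeeds when the full commit message carries the sign-off trailer
# that git commit --signoff adds.
has_signoff() {
  printf '%s\n' "$1" | grep -qE '^Signed-off-by: .+ <[^>]+>$'
}
```

For the most recent commit, run has_signoff "$(git log -1 --format=%B)". In a commit-msg hook, git passes the path of the message file as $1, so check_commit_subject "$(cat "$1")" works there.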

Troubleshooting

- When running make pre-commit -B, if you encounter errors like make: *** [pkg/apiclient/clusterworkflowtemplate/cluster-workflow-template.swagger.json] Error 1, ensure that you have checked out your code into $GOPATH/src/github.com/argoproj/argo-workflows.
- If you encounter "out of heap" issues when building the UI through Docker, check the resources allocated to Docker. Compilation may fail if less than 4Gi of RAM is allocated.
- To start profiling with pprof, pass ARGO_PPROF=true when starting the controller locally. Then run the following:

go tool pprof http://localhost:6060/debug/pprof/profile # 30-second CPU profile

go tool pprof http://localhost:6060/debug/pprof/heap # heap profile

go tool pprof http://localhost:6060/debug/pprof/block # goroutine blocking profile

Using Multiple Terminals

I run the controller in one terminal and the UI in another. I like the UI: debugging workflows there is much faster than in the terminal. This setup lets you make changes to the controller and restart it without restarting the UI (which I think takes too long to start up).

As a convenience, CTRL=false implies UI=true, so just run:

make start CTRL=false